Researchers Say an AI-Powered Transcription Tool Used in Hospitals Invents Things No One Ever Said

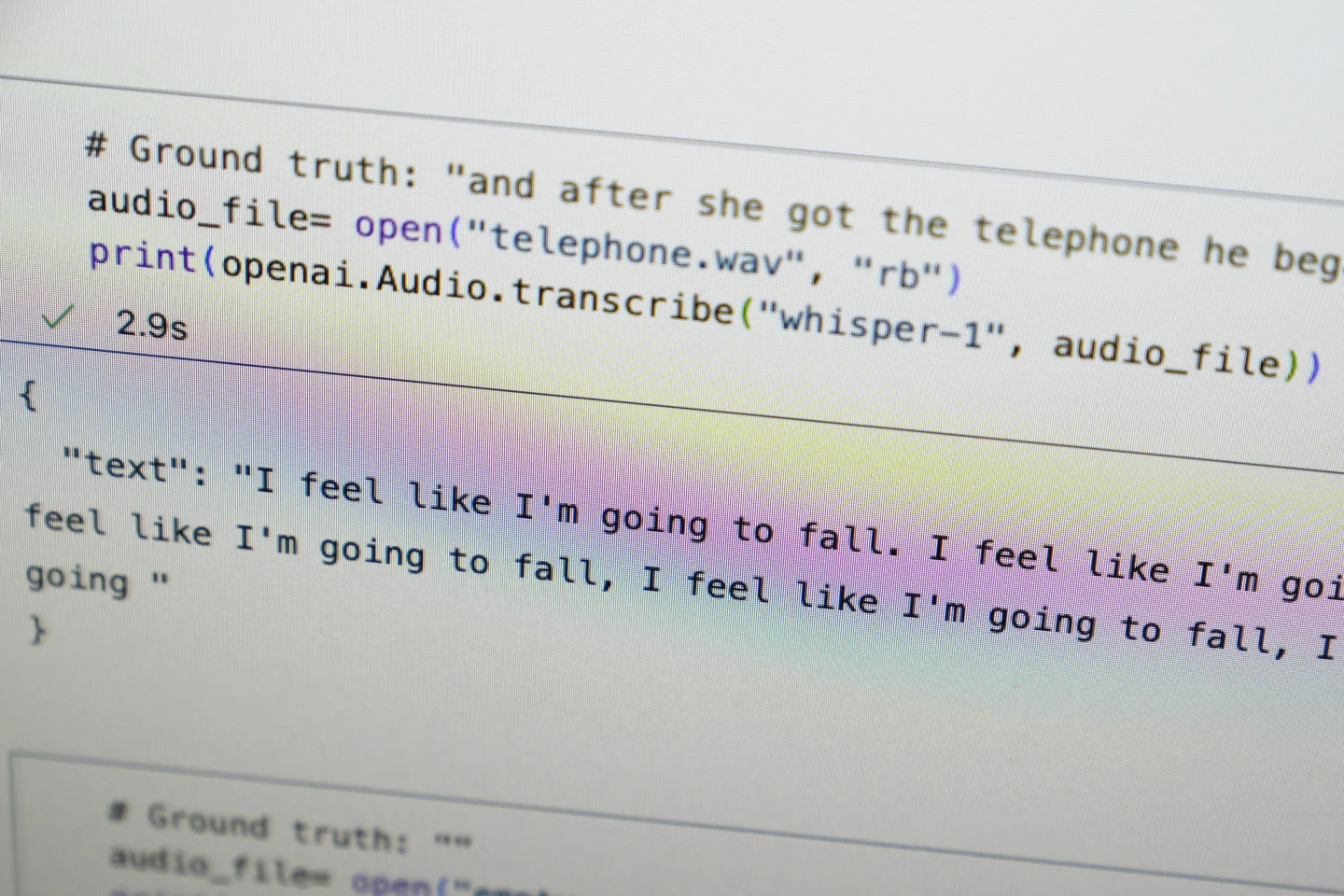

OpenAI’s AI transcription tool, Whisper, once promoted as offering “human-level robustness and accuracy,” is facing mounting criticism for producing fabricated text in its transcriptions. Known as “hallucinations” in the tech industry, these imagined words and phrases have raised alarms, especially as the tool finds increasing use in hospitals, where accuracy is critical.

Whisper’s Hallucinations: What Are They?

More than a dozen software engineers, developers, and researchers report that Whisper’s hallucinations can be troubling: the tool sometimes inserts racial commentary, violent language, or even fictional medical advice into its transcriptions. Such errors pose serious risks as Whisper spreads across industries, where it is used to transcribe interviews, generate subtitles, and handle other sensitive material.

Whisper in Hospitals: A High-Stakes Issue

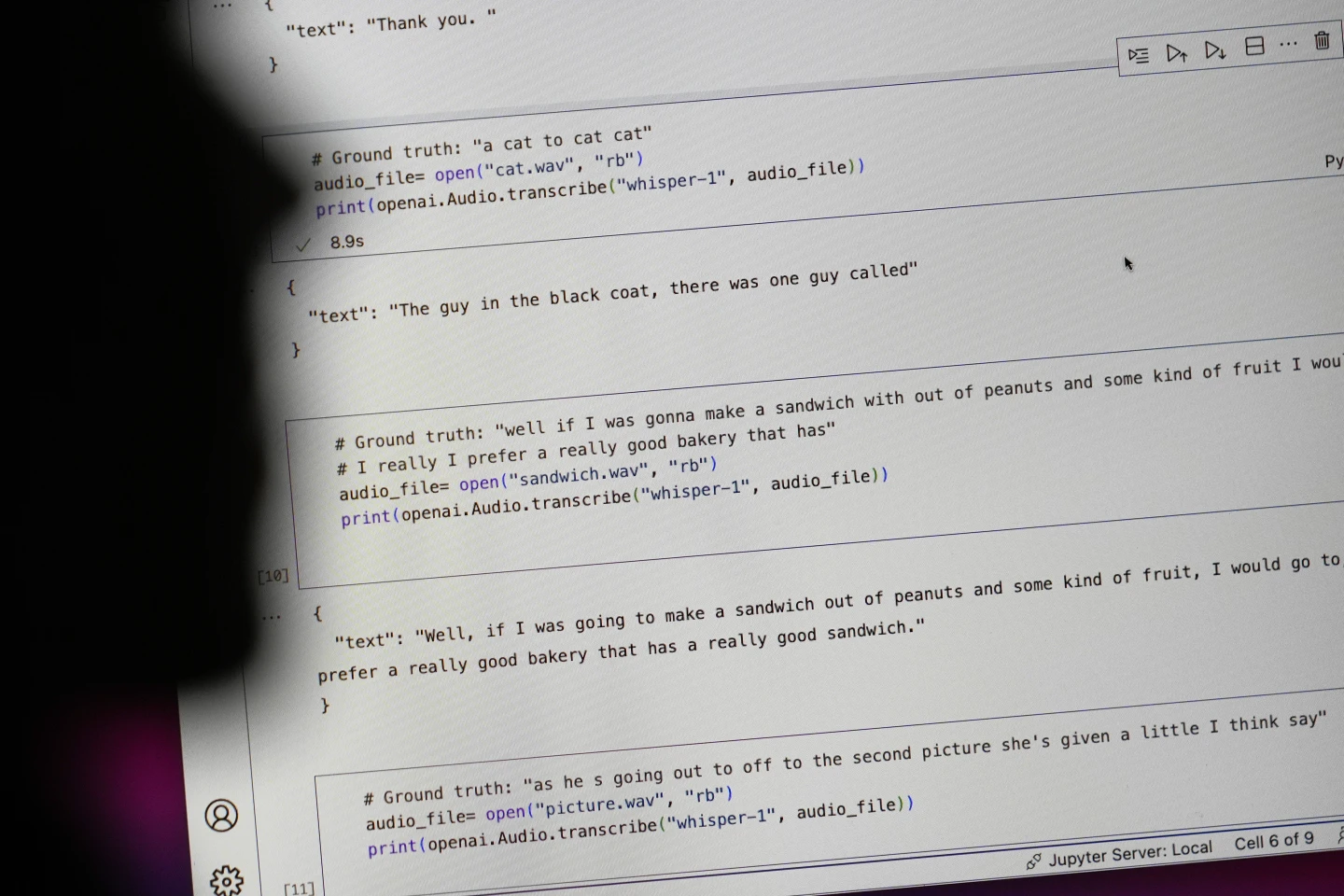

The medical field’s adoption of Whisper-based tools has stirred particular concern. Many hospitals are rushing to use Whisper to transcribe patient-doctor consultations, even though OpenAI has cautioned against applying the tool in “high-risk domains.” The problems are already documented: a University of Michigan researcher studying public meetings found hallucinations in eight out of every ten audio transcriptions he examined.

Researchers Report Frequent Errors in Whisper Transcriptions

A machine learning engineer identified hallucinations in nearly half of the more than 100 hours of transcriptions he reviewed. Another developer reported finding them in almost all of the 26,000 transcripts he analyzed. Even high-quality audio samples aren’t immune: in a recent study, computer scientists found 187 instances of fabricated text among 13,000 recordings.
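
For a rough sense of scale, the short Python sketch below turns the last figure into a rate and applies it to a larger batch of recordings. It uses only the two numbers quoted above. The function name `hallucination_rate` and the one-million-recording volume are illustrative assumptions, not figures from the researchers, and the sketch treats each fabricated instance as one affected recording.

```python
def hallucination_rate(fabricated: int, recordings: int) -> float:
    """Return fabricated instances per recording, as a fraction."""
    if recordings <= 0:
        raise ValueError("recordings must be positive")
    return fabricated / recordings


# Figures quoted in the article: 187 fabrications among 13,000 recordings.
rate = hallucination_rate(187, 13_000)

# Hypothetical volume, chosen only for illustration (not from the article).
HYPOTHETICAL_RECORDINGS = 1_000_000
projected = round(rate * HYPOTHETICAL_RECORDINGS)

print(f"Rate: {rate:.2%}")                                   # Rate: 1.44%
print(f"Projected flawed transcriptions: {projected:,}")     # 14,385
```

Under those assumptions, a rate of about 1.4 percent is small per recording but adds up quickly at volume.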

The Potential Scale of Whisper’s Errors

Researchers warn that errors at this rate could produce tens of thousands of flawed transcriptions, which suggests that Whisper’s inaccuracies are a systemic problem rather than a rare glitch. For now, the risks of deploying Whisper for critical tasks, particularly in healthcare, remain a pressing concern in the AI industry.